Music producers don’t have a volume problem; they have a discovery efficiency problem.

Modern sample libraries contain thousands of sounds, but finding the right one often interrupts creative momentum.

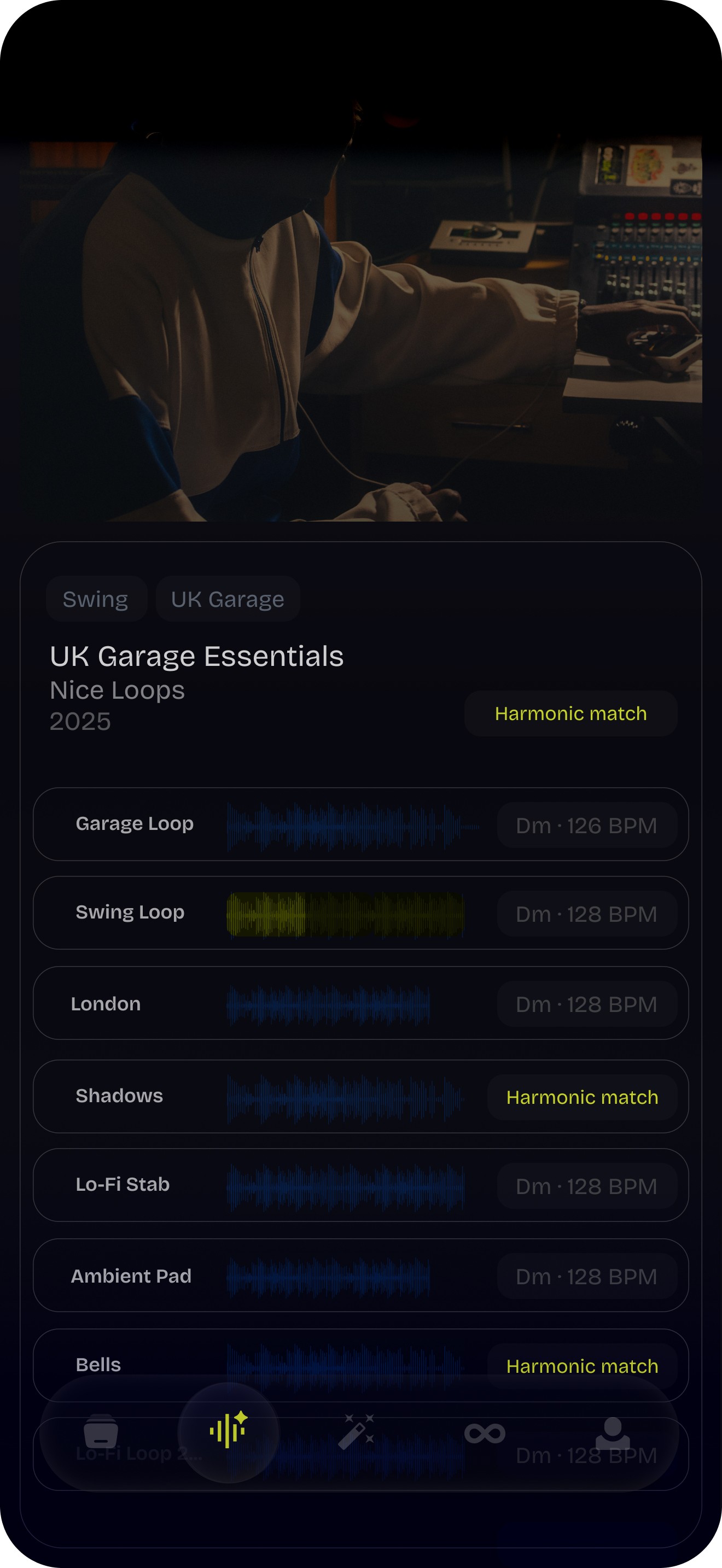

LoopHaven explores an audio-first discovery system that replaces text-based search with sound-to-sound interaction and instant cloud-to-VST synchronization, enabling producers to discover, audition, and use sounds without leaving their creative flow.

∞haven

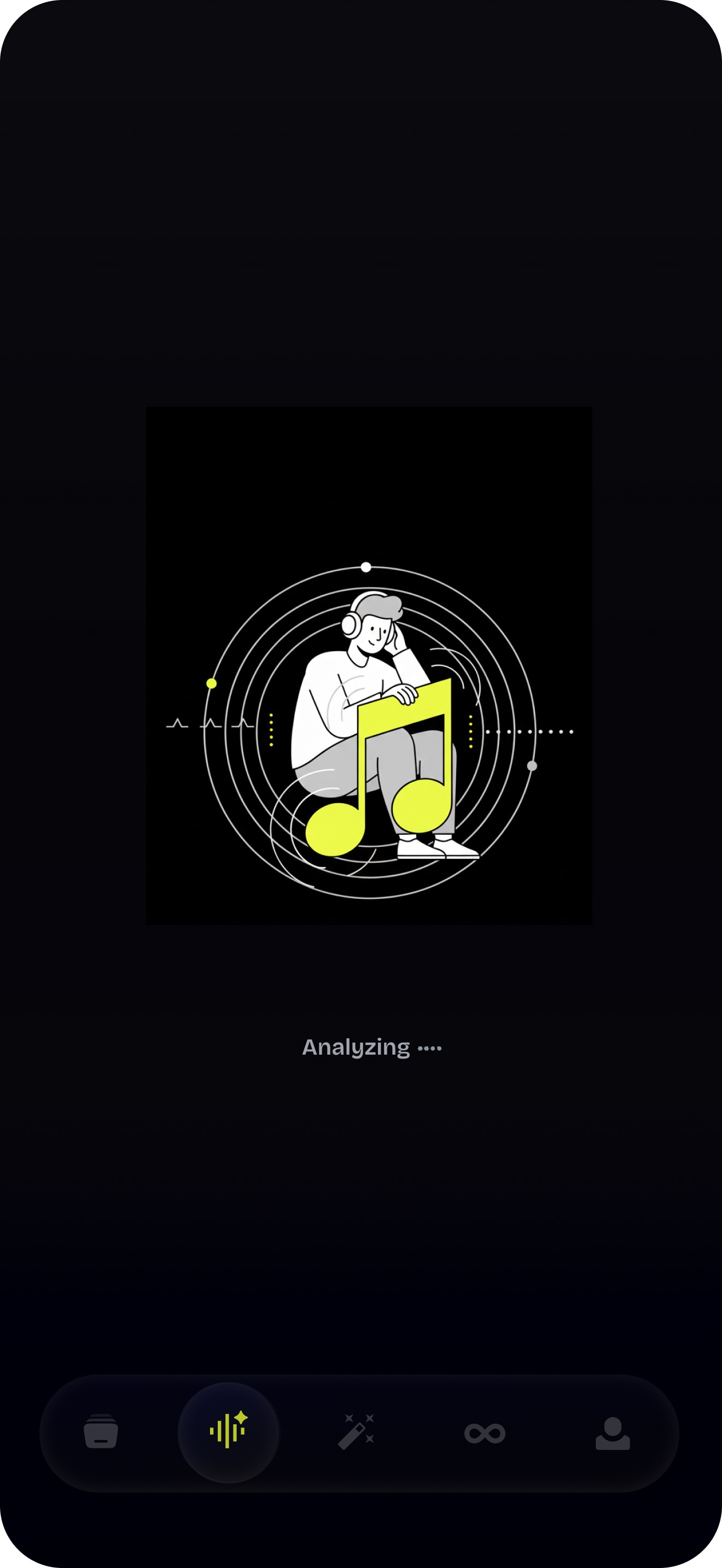

LoopHaven supports discovery as part of music creation, not just a prep step. Producers don’t need to define intent upfront. The system reacts to audio input, letting ideas grow through listening and iteration.

This shift moves discovery from language searches to sound-based interaction. It prioritizes momentum over precision, reducing the friction that disrupts a creator’s flow.

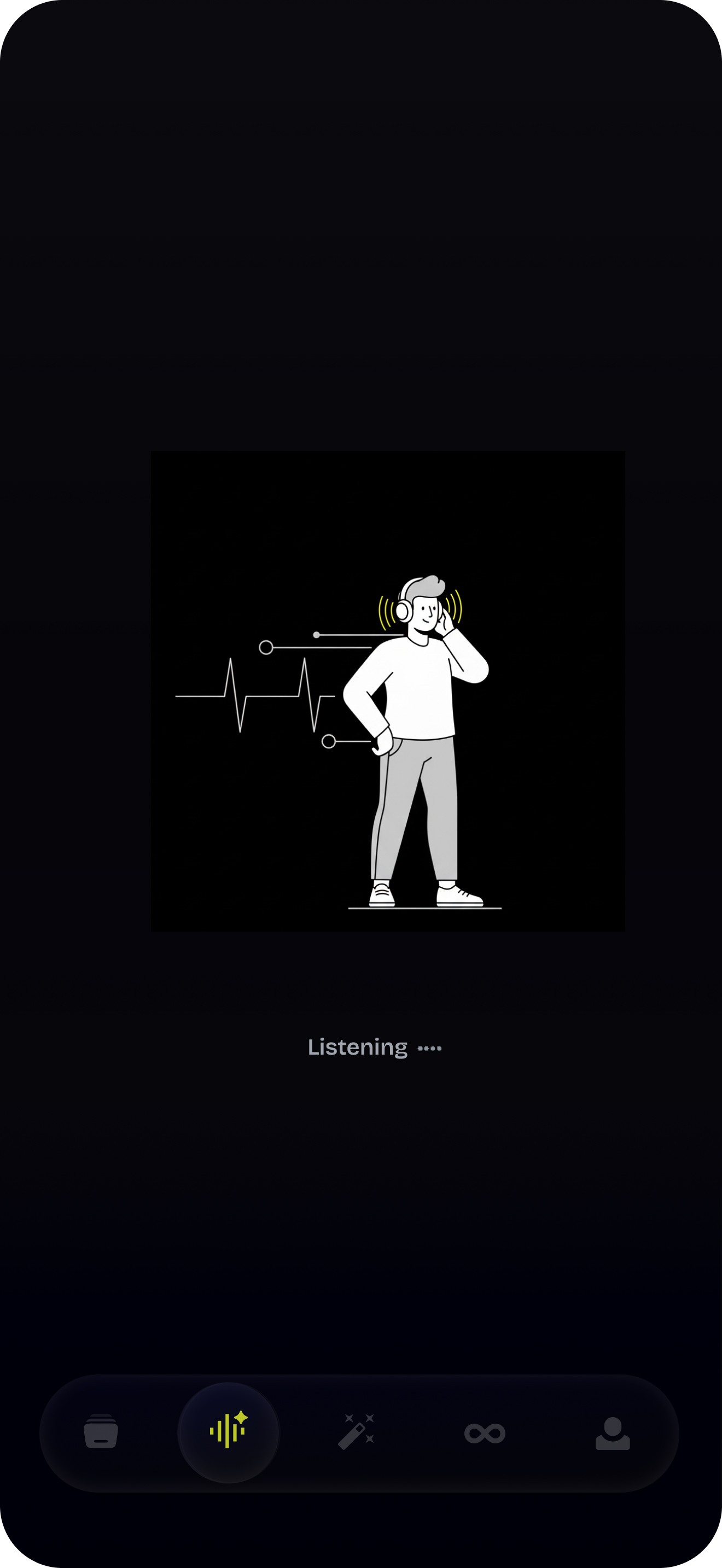

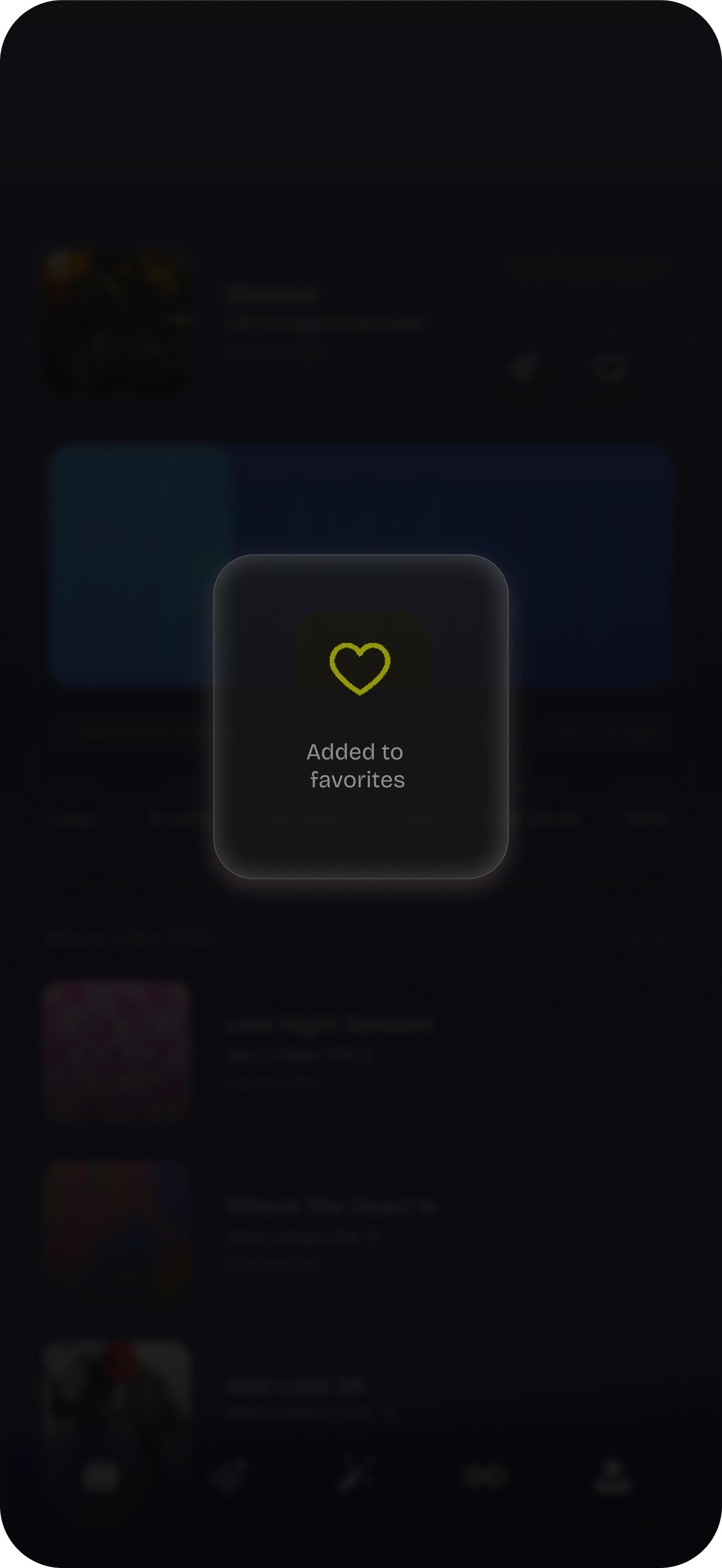

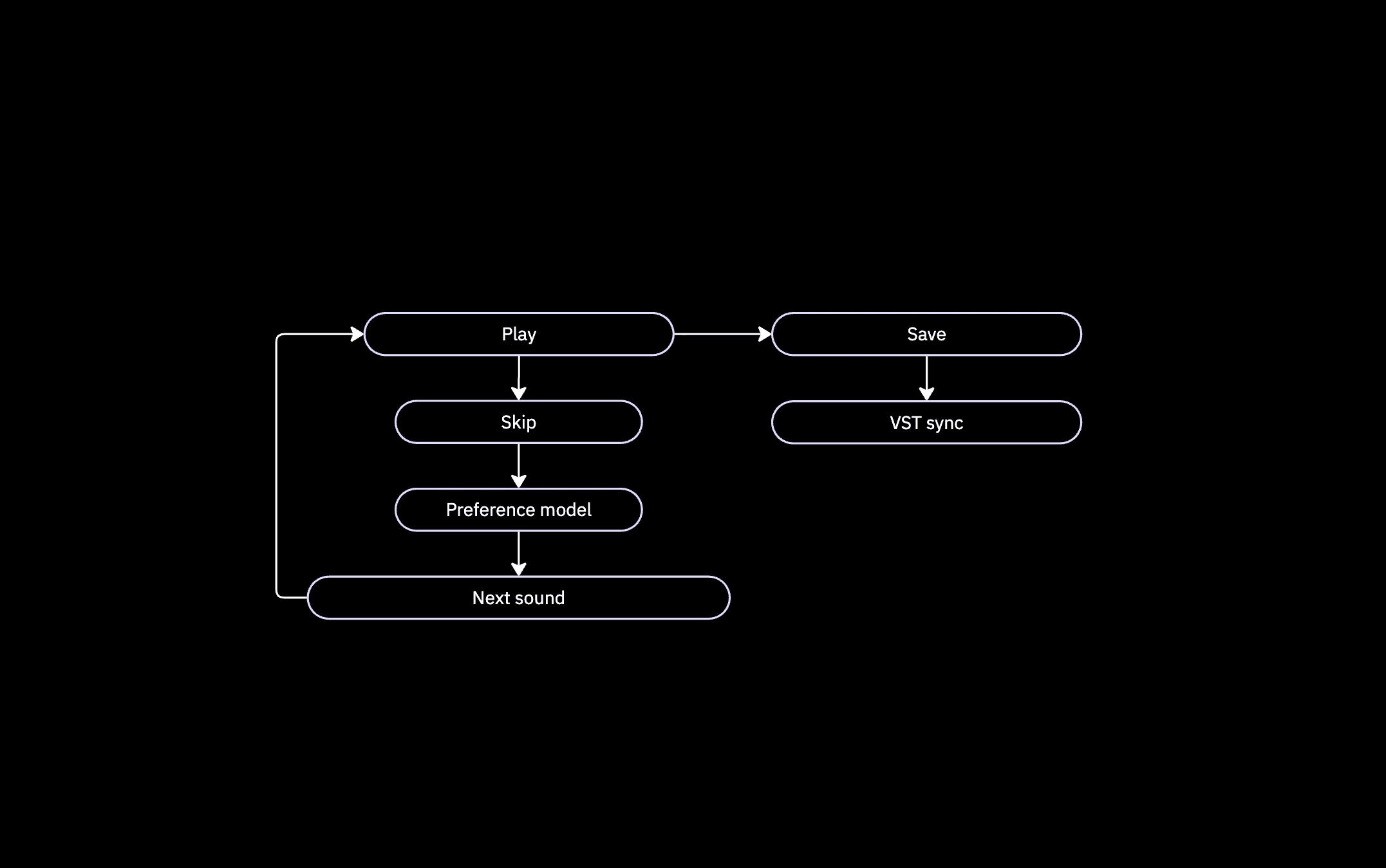

Radio mode removes decision-making from discovery.

Simple interactions such as Like or Skip update the preference model in real time, shaping what plays next while keeping attention focused on listening rather than interface.
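As a rough illustration of what updating a preference model from Like and Skip could look like, the sketch below nudges a weight vector toward liked sounds and away from skipped ones. The `PreferenceModel` class, the feature names, and the learning rate are all hypothetical; the case study does not describe the actual model.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PreferenceModel:
    """Hypothetical preference weights nudged by Like/Skip feedback."""

    weights: dict[str, float] = field(default_factory=dict)
    learning_rate: float = 0.2  # assumed step size, not taken from the case study

    def update(self, features: dict[str, float], liked: bool) -> None:
        # Move toward a liked sound's features, away from a skipped sound's.
        direction = 1.0 if liked else -1.0
        for name, value in features.items():
            current = self.weights.get(name, 0.0)
            self.weights[name] = current + direction * self.learning_rate * value

    def score(self, features: dict[str, float]) -> float:
        # Dot product between the learned weights and a candidate's features.
        return sum(self.weights.get(n, 0.0) * v for n, v in features.items())


model = PreferenceModel()
model.update({"warm_pad": 1.0, "bright_lead": 0.0}, liked=True)   # Like
model.update({"warm_pad": 0.0, "bright_lead": 1.0}, liked=False)  # Skip
```

After these two interactions, a candidate described as a warm pad scores higher than a bright lead, so it would be queued first.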

Radio mode is designed for moments when producers do not yet have a clear direction, only the desire to keep creating.

The system plays a continuous stream of musically compatible sounds based on listening behavior and production history. Discovery occurs passively, without filters, categories, or manual input.

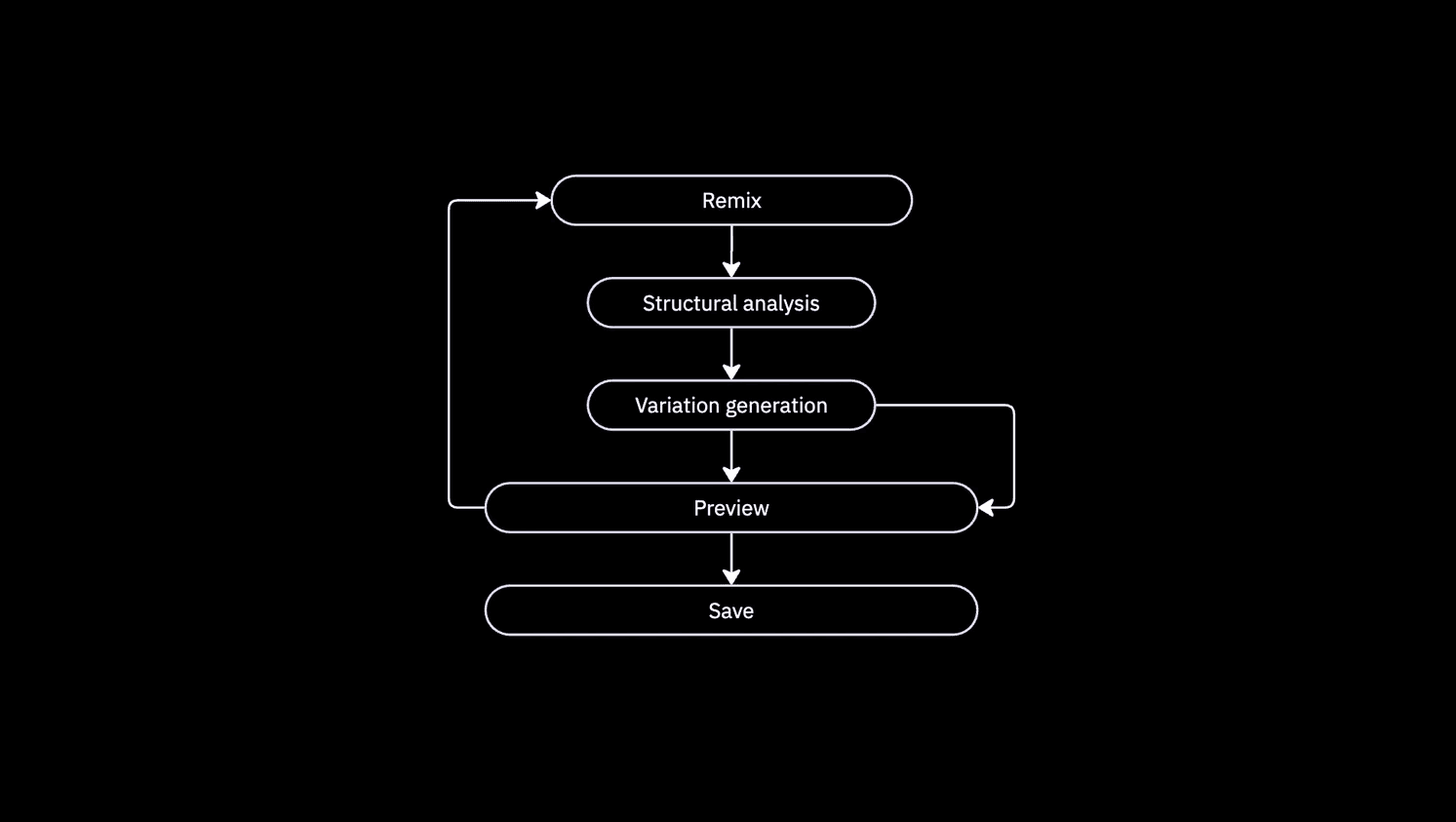

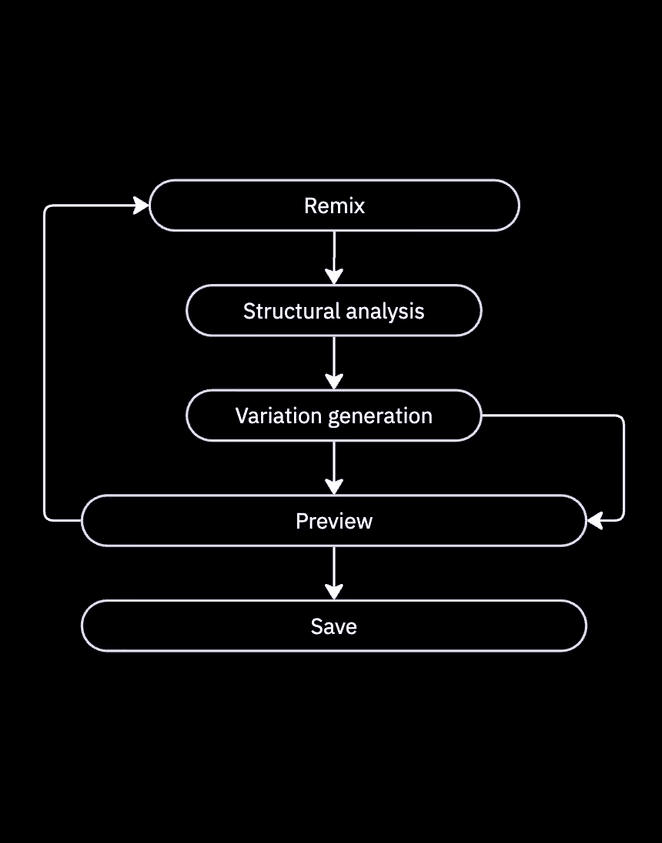

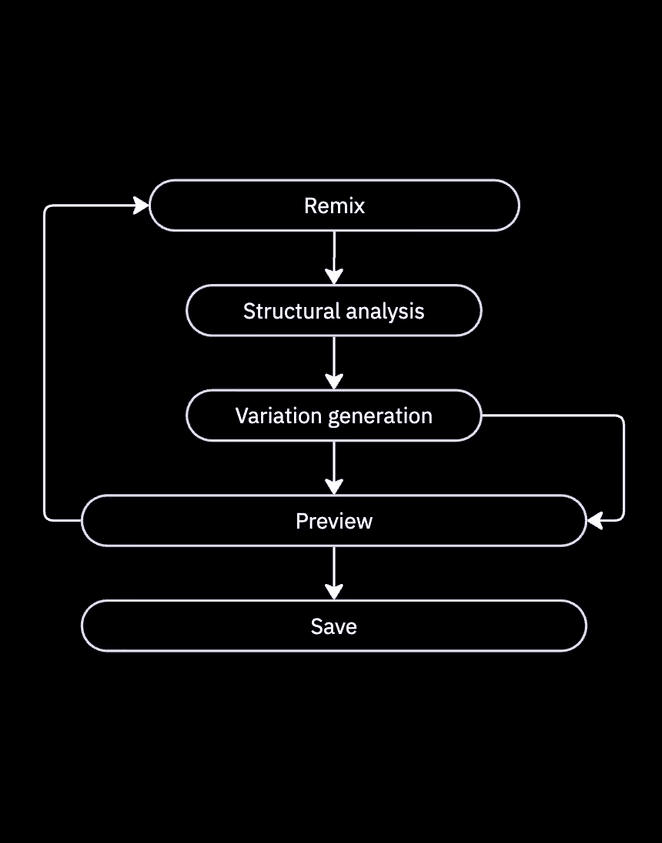

Remix mode lets you explore ideas in new ways. Instead of just replacing sounds, producers create variations. These variations keep the musical identity while changing timing, rhythm, and phrasing.

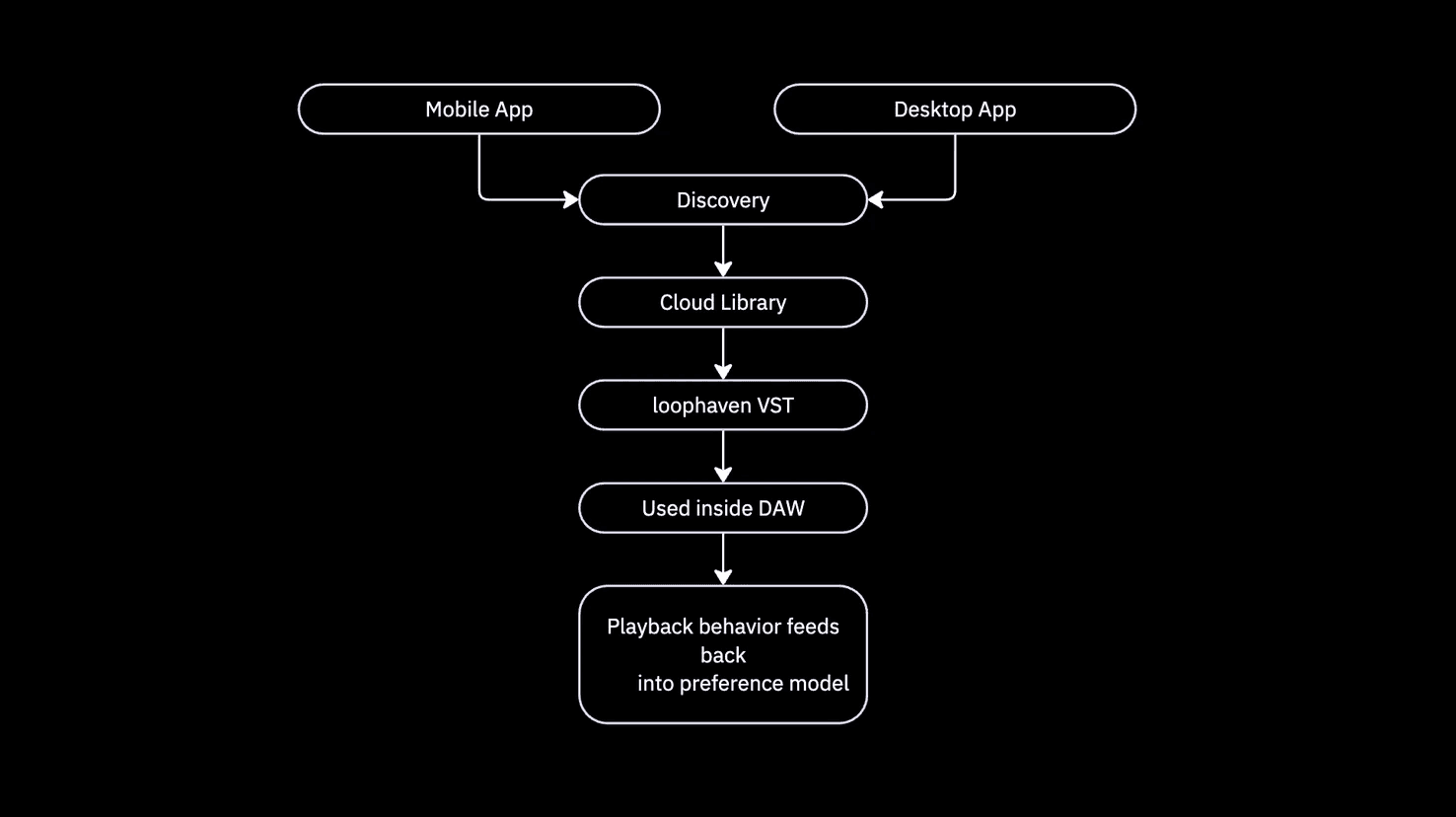

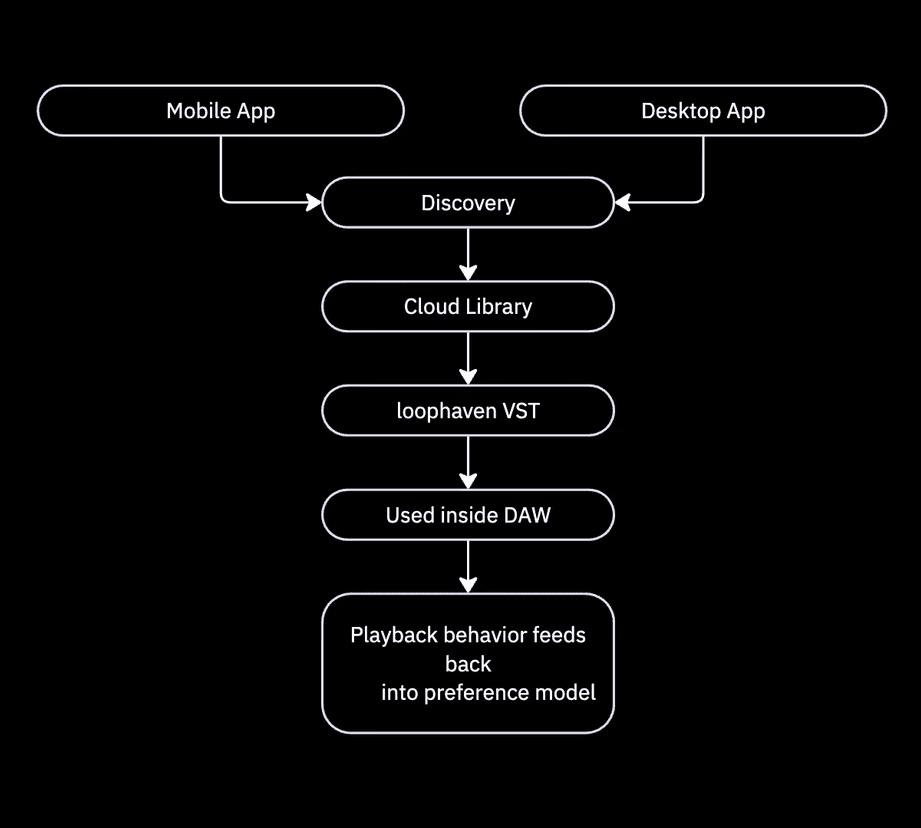

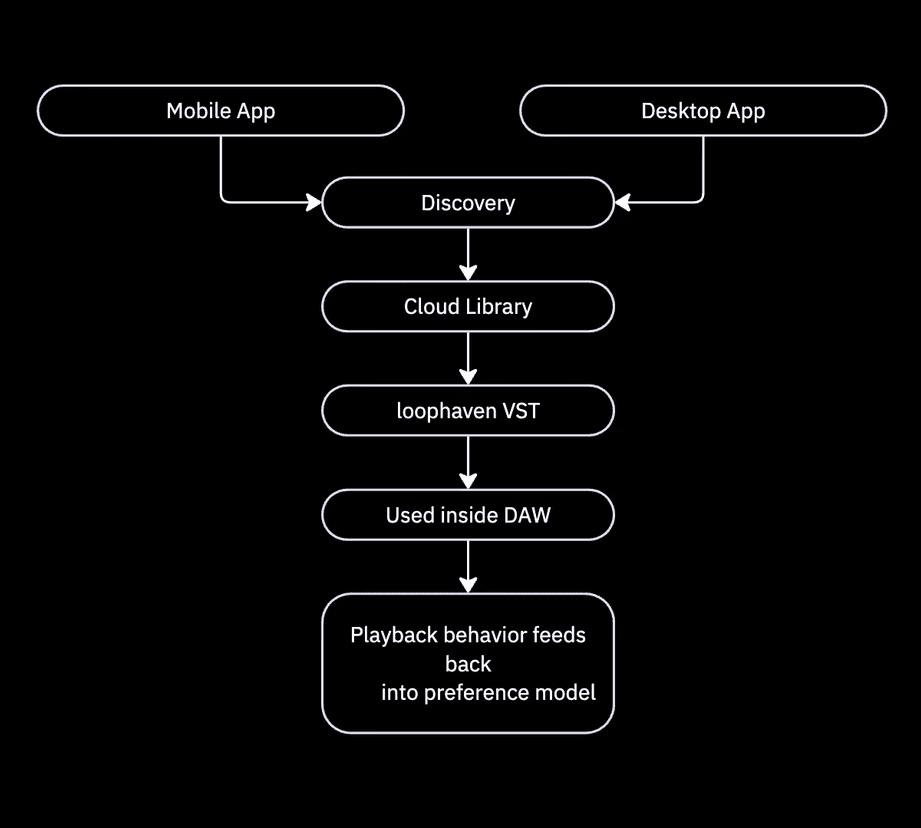

LoopHaven works as a connected system across mobile, desktop, and plugin platforms.

All discovery actions feed into a shared preference model stored in the cloud. When a sound is saved on any device, it’s instantly available in the LoopHaven VST plugin. This means no manual downloads, file management, or importing workflows are needed.

This setup lets discovery happen anywhere while keeping usage focused on the production environment.

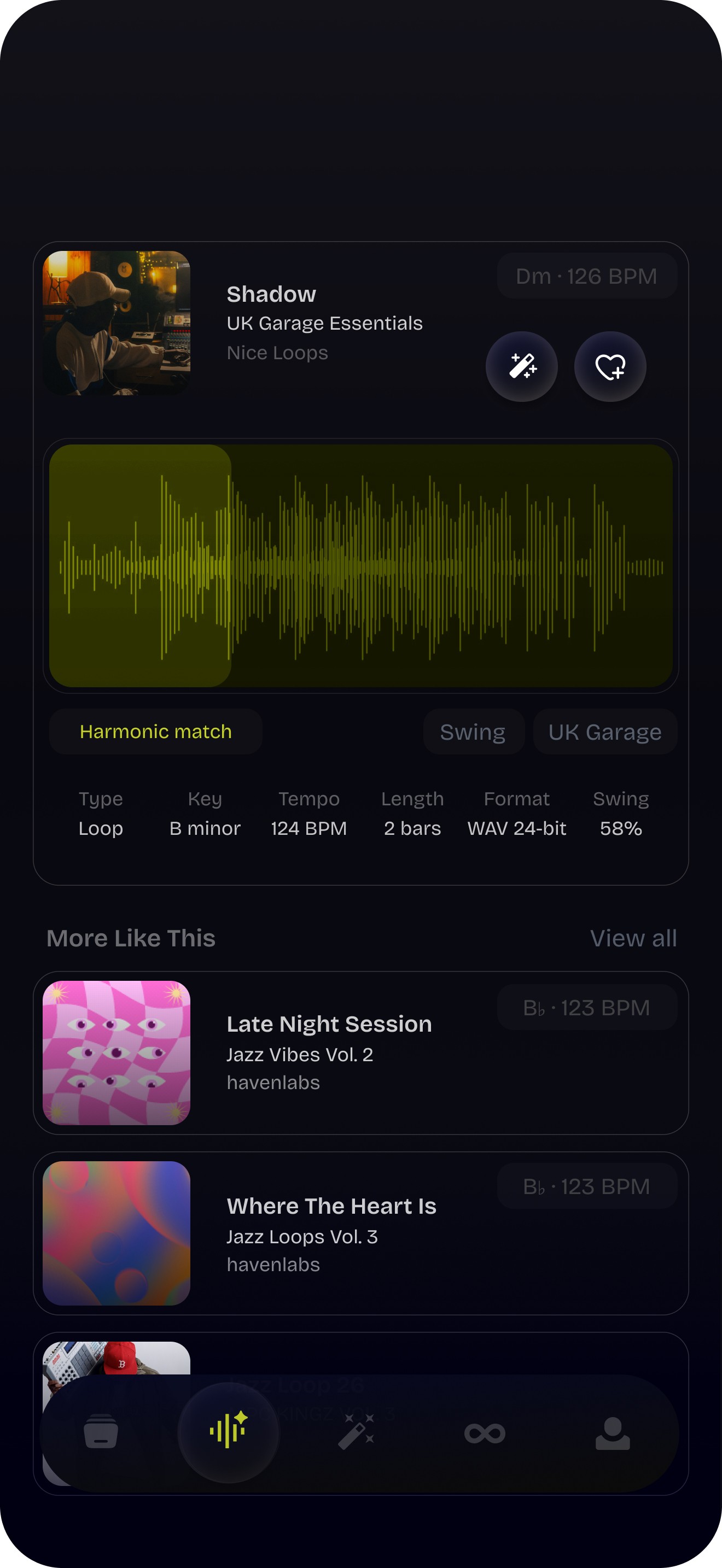

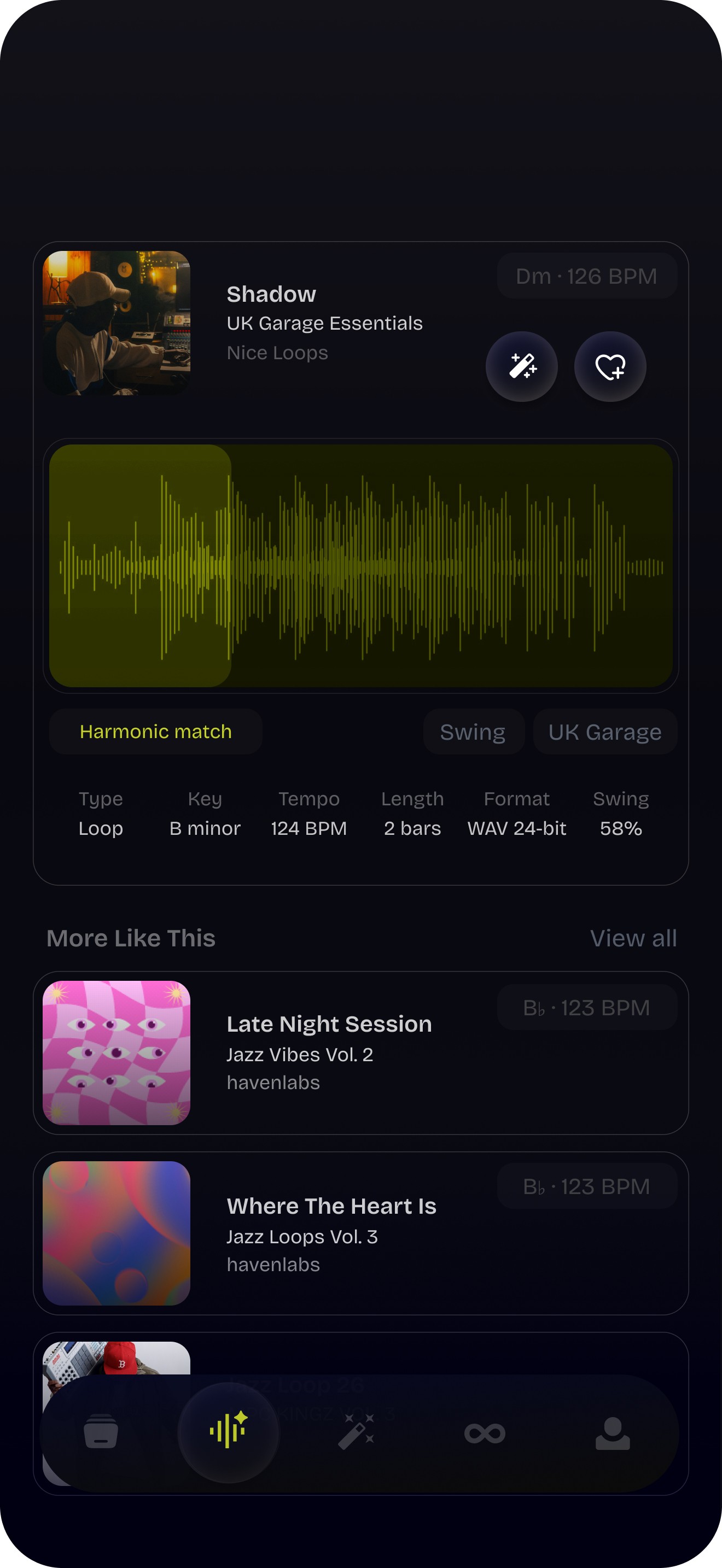

To assess the direction before system expansion, I validated the core interaction model. This involved early concept testing and internal critique sessions with producers and designers.

What was tested:

Sound-to-sound discovery versus traditional tag-based browsing.

Time to first usable sound from a cold start.

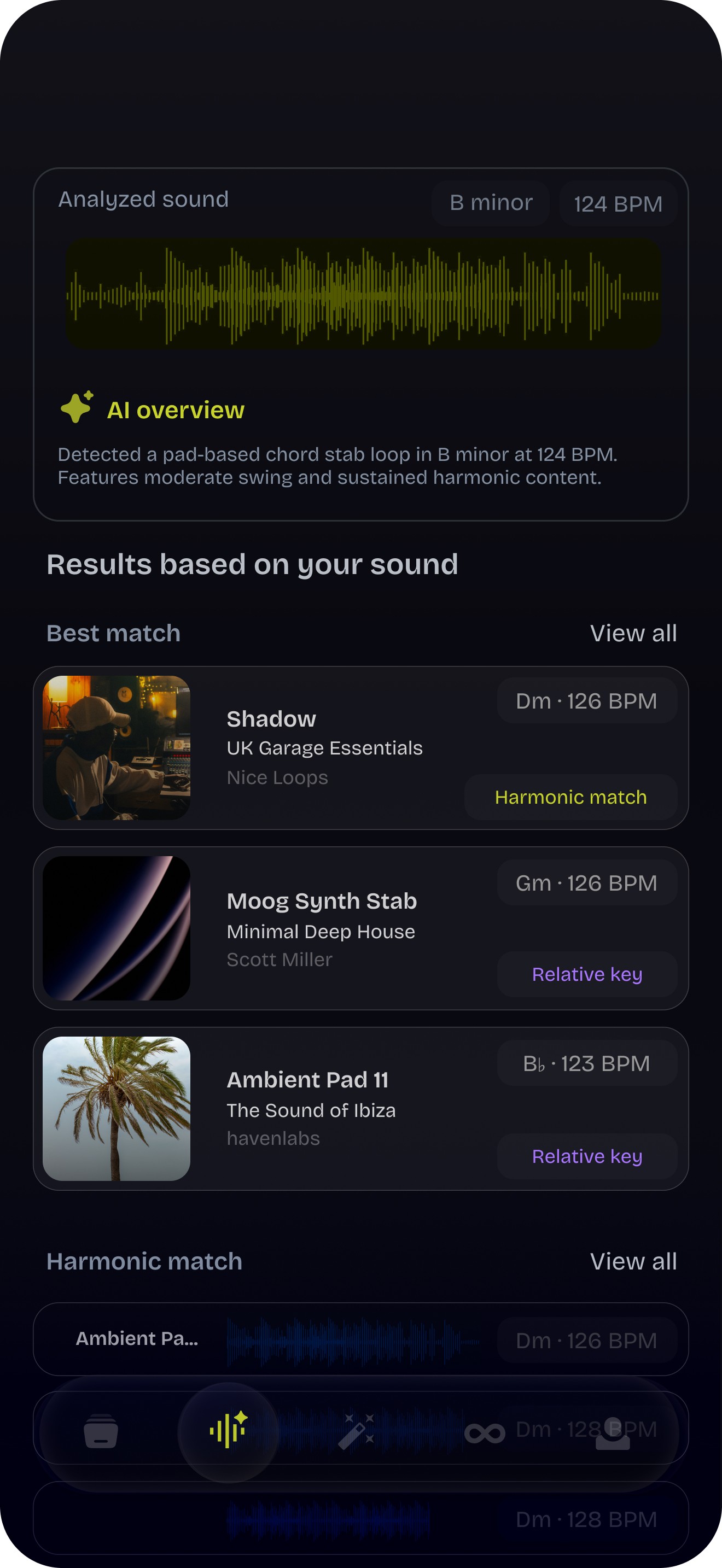

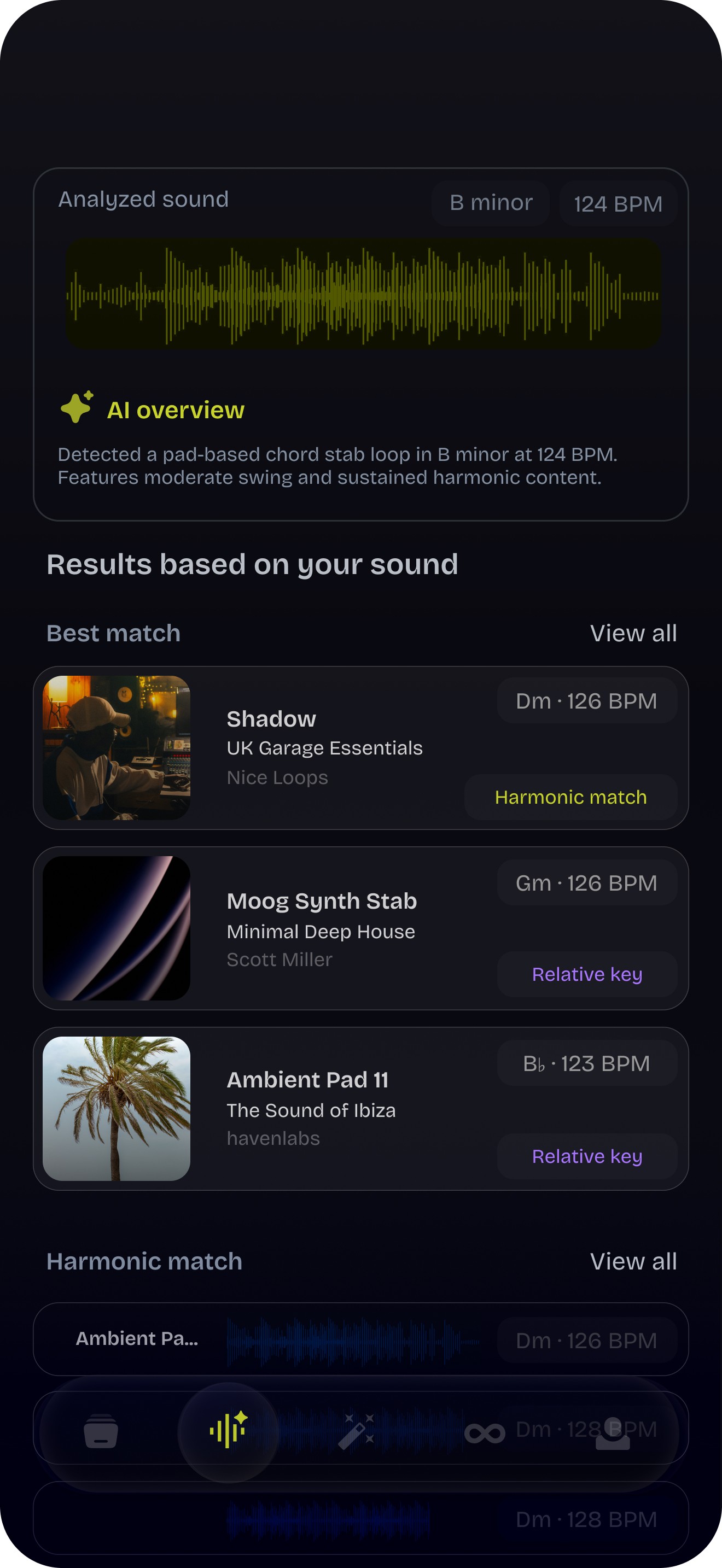

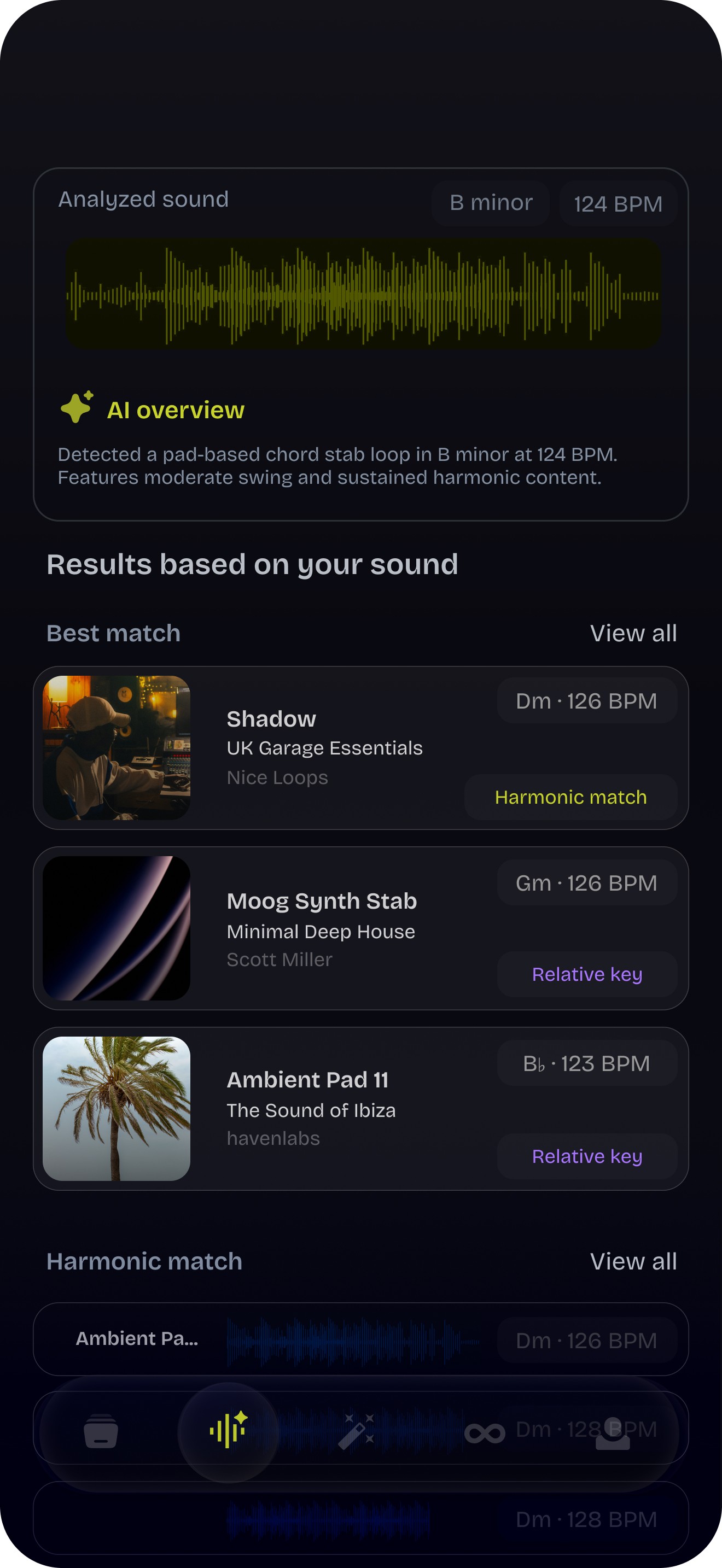

Clarity of AI output without needing technical explanations.

Key signals observed:

Producers preferred discovering sounds through audio instead of filters or keywords.

Participants found the experience “faster” and “less interruptive” than browsing folders.

The AI overview worked best when using musical terms (like key, tempo, and role) instead of abstract ML language.

Resulting iterations:

Reduced visible filters in early discovery stages.

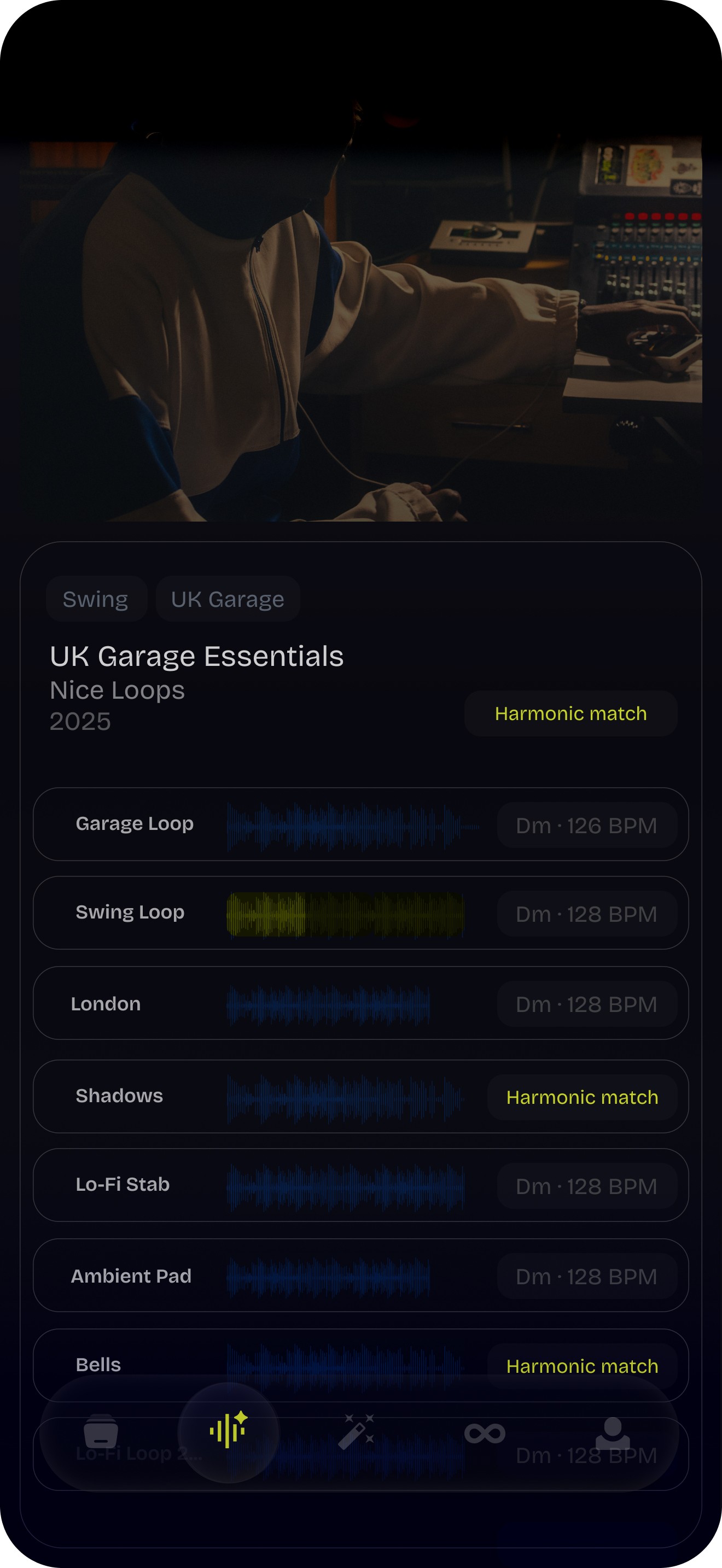

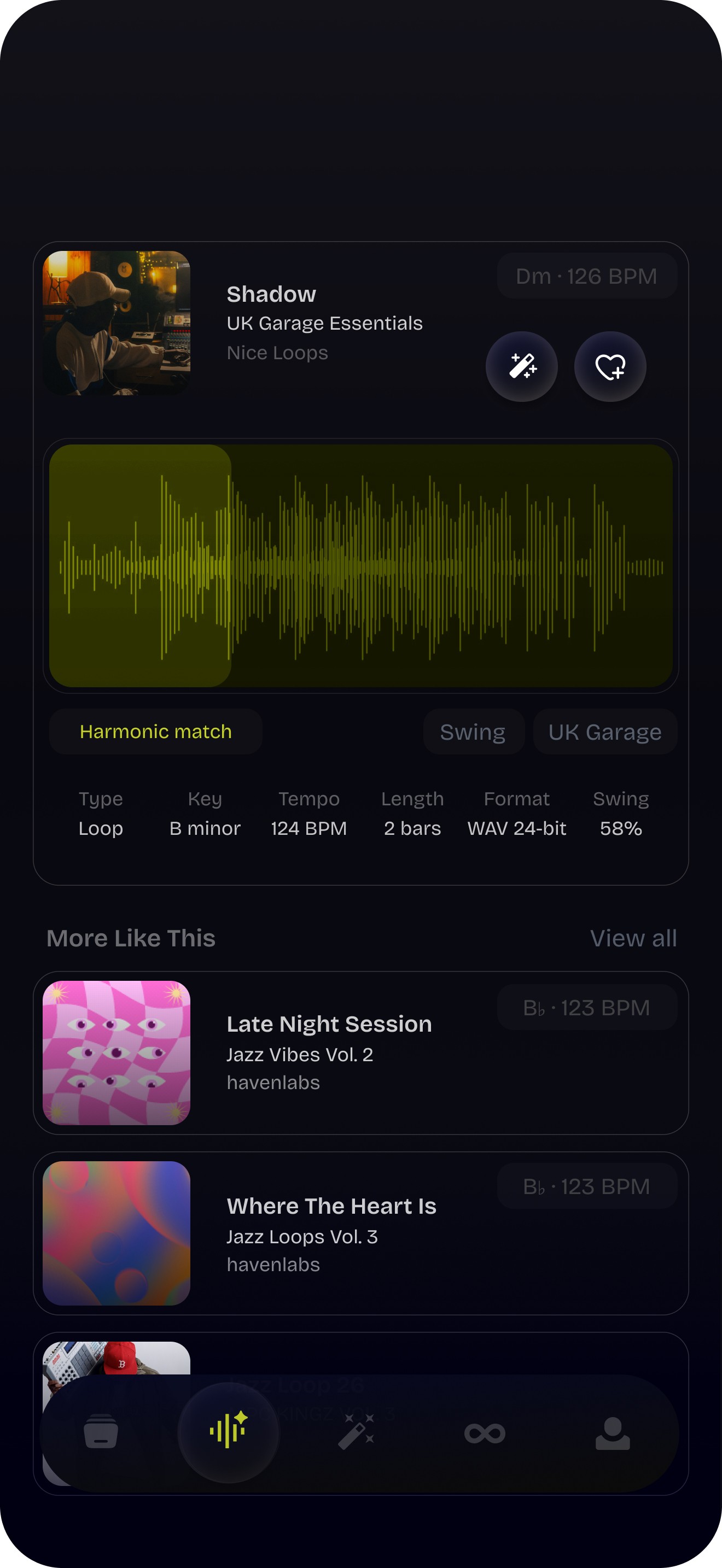

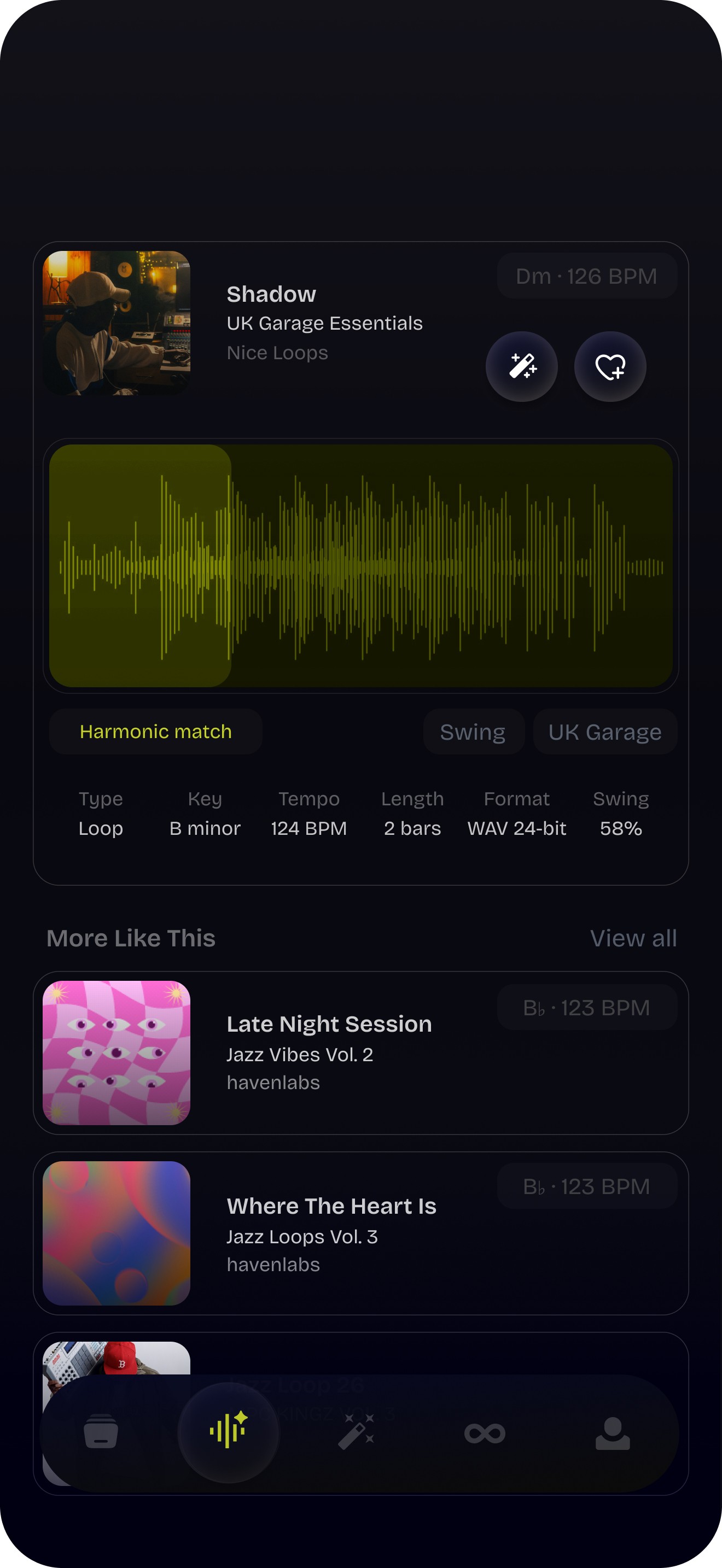

Focused on harmonic compatibility instead of exact similarity scoring.

Introduced the AI Overview card to build trust without overwhelming users.

These insights reinforced the core hypothesis: momentum is more important than precision during active music creation.
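One of the iterations above favors harmonic compatibility over exact similarity scoring. The case study does not say how compatibility is computed, so the sketch below uses a common mixing heuristic as a stand-in: two keys are treated as compatible if they are identical, a perfect fifth apart in the same mode, or relative major/minor. The function names and key format are assumptions.

```python
NOTES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def parse_key(key: str) -> tuple[int, str]:
    """Turn a label such as 'A minor' into (pitch class, mode)."""
    root, mode = key.split()
    return NOTES.index(root), mode


def keys_compatible(a: str, b: str) -> bool:
    """Heuristic check: same key, fifth-related, or relative major/minor."""
    root_a, mode_a = parse_key(a)
    root_b, mode_b = parse_key(b)
    interval = (root_b - root_a) % 12  # semitones from a's root up to b's root
    if mode_a == mode_b:
        # 0 = same key, 7 = a fifth up, 5 = a fifth down.
        return interval in (0, 5, 7)
    if mode_a == "major":
        # The relative minor sits 9 semitones above the major root.
        return interval == 9
    # The relative major sits 3 semitones above the minor root.
    return interval == 3
```

Under this rule, a loop in C major would be offered alongside sounds in G major or A minor, but not F# major.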

Early exploration looked at how producers find sounds while working, not how tools think they should search.

Research showed that discovery often occurs during composition. At this stage, creative intent is flexible and hard to express. Keyword searches, filters, and folders demand clear intent at exactly the moment producers are thinking intuitively.

This gap between how producers create and how traditional tools work often leads to stalled sessions, abandoned ideas, and extra mental strain.

When viewed holistically, the market reveals a shared gap:

Across platforms, several consistent behaviors emerged:

Discovery mainly relies on text-based metadata like tags, filters, and folders.

Musical intent is usually expressed through language instead of sound.

Discovery, analysis, and usage usually happen in different environments.

File-based workflows dominate how sounds move into the DAW.

Learning systems, when present, are often limited to browsing behavior, not production context.

To understand how producers find and use sounds, we looked at workflows on established platforms and new tools, including:

We aimed to spot common patterns in the ecosystem, not to assess individual products.

Instead of replacing tools or competing on library size, it focuses on:

audio-first discovery, not keyword search

musical compatibility, not categorical similarity

continuous flow, not discrete steps

cloud-synced usage, not file management

Its role is to connect discovery and production intelligently.

The opportunity came from looking at how producers use different tools, not just individual platforms.

LoopHaven tackles friction at the system level, where discovery, intelligence, and execution meet.

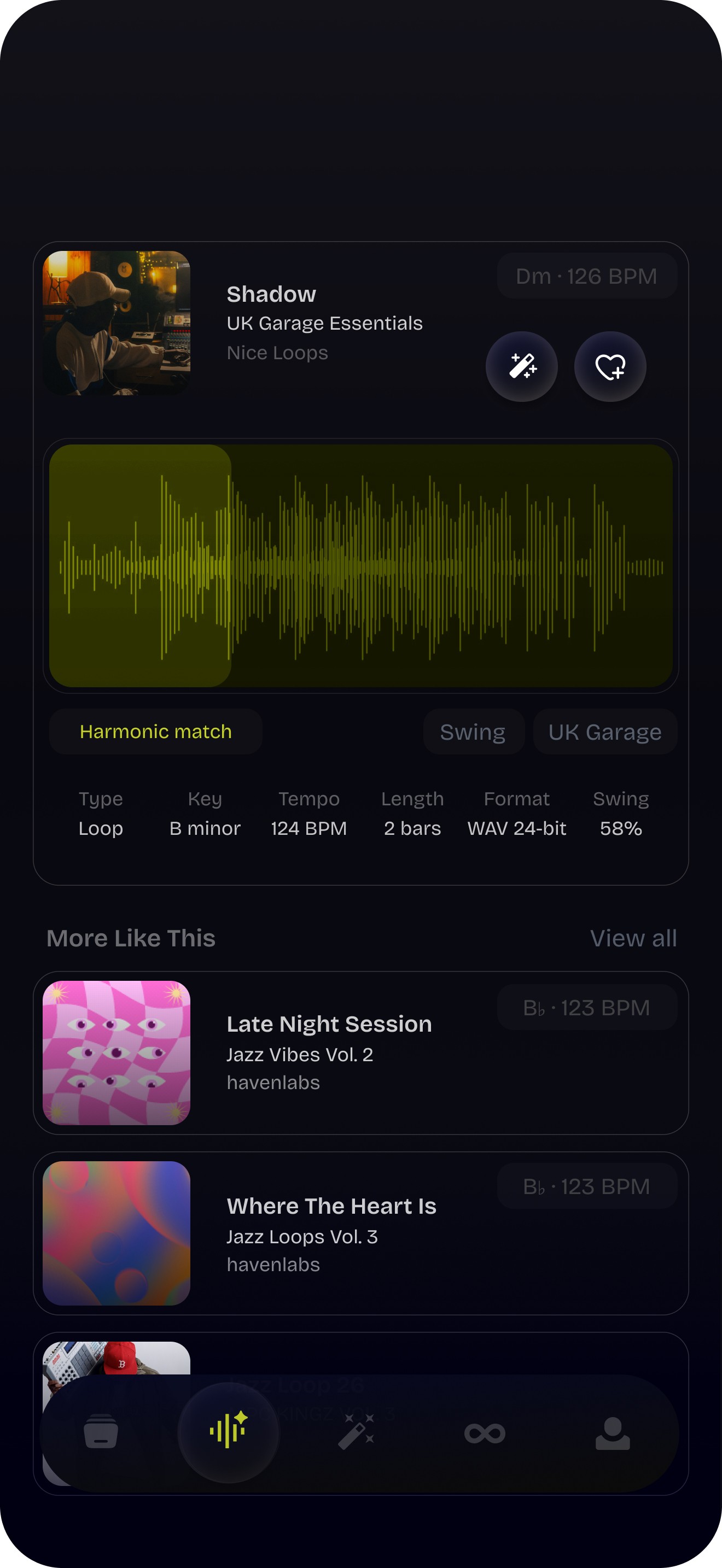

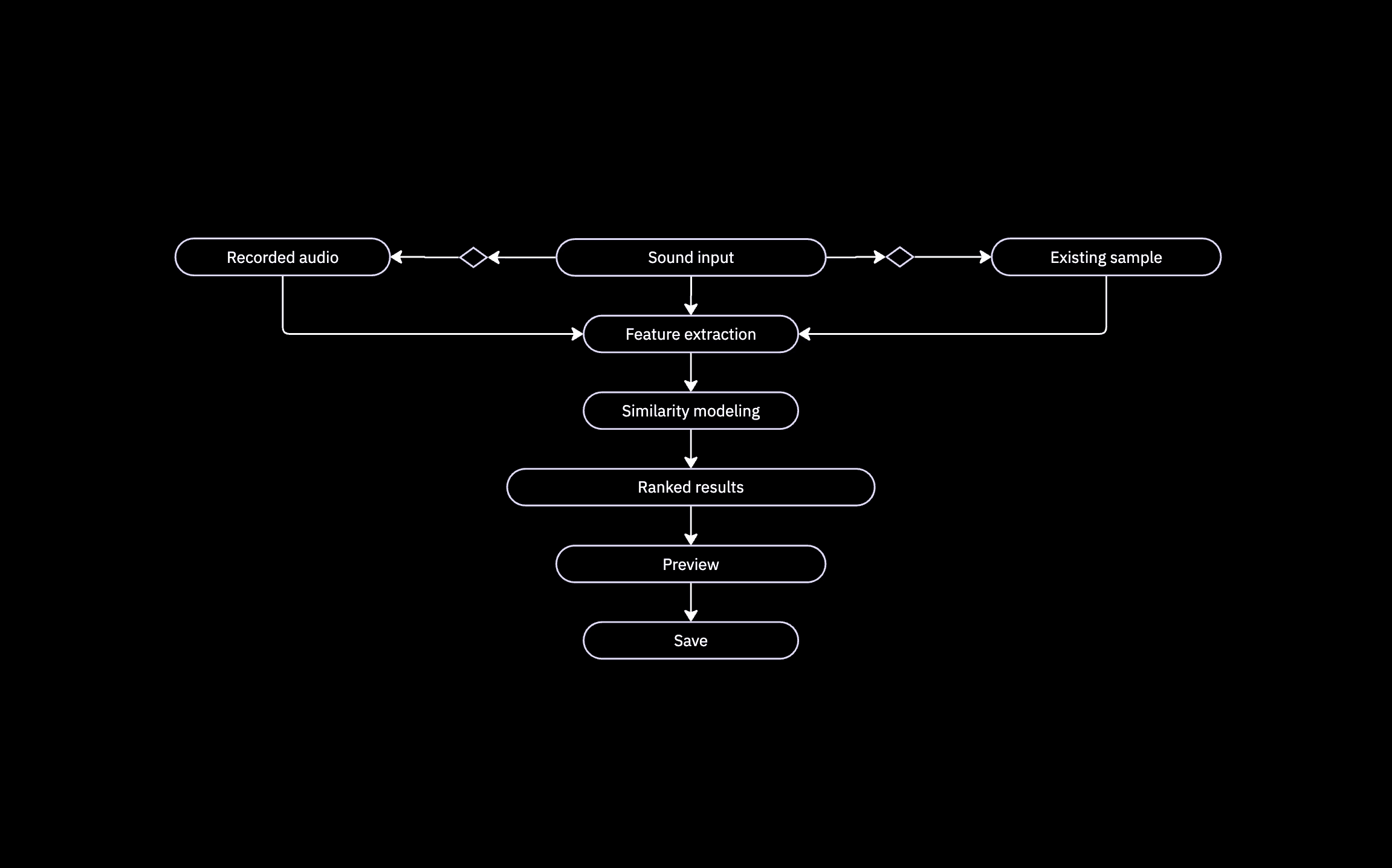

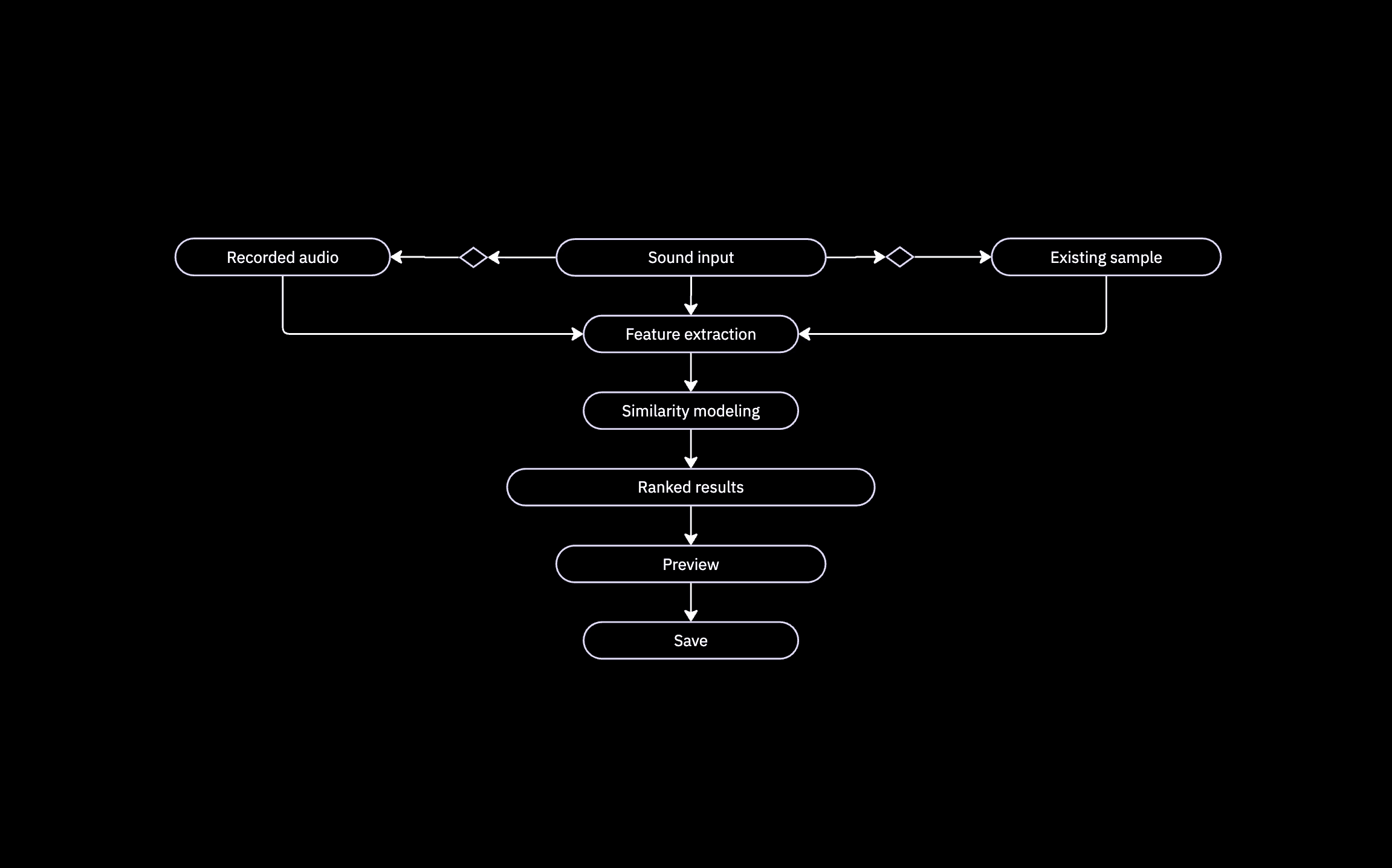

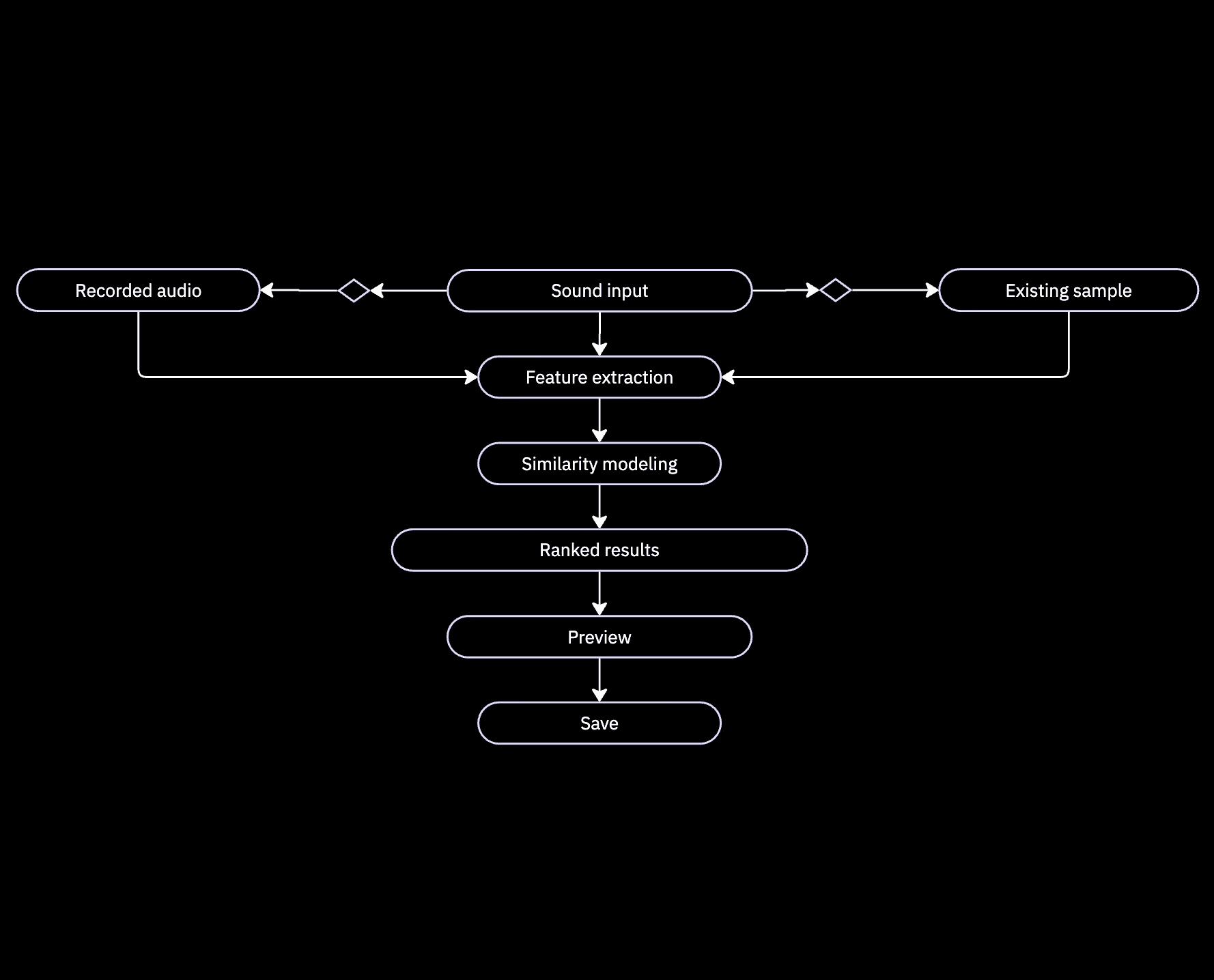

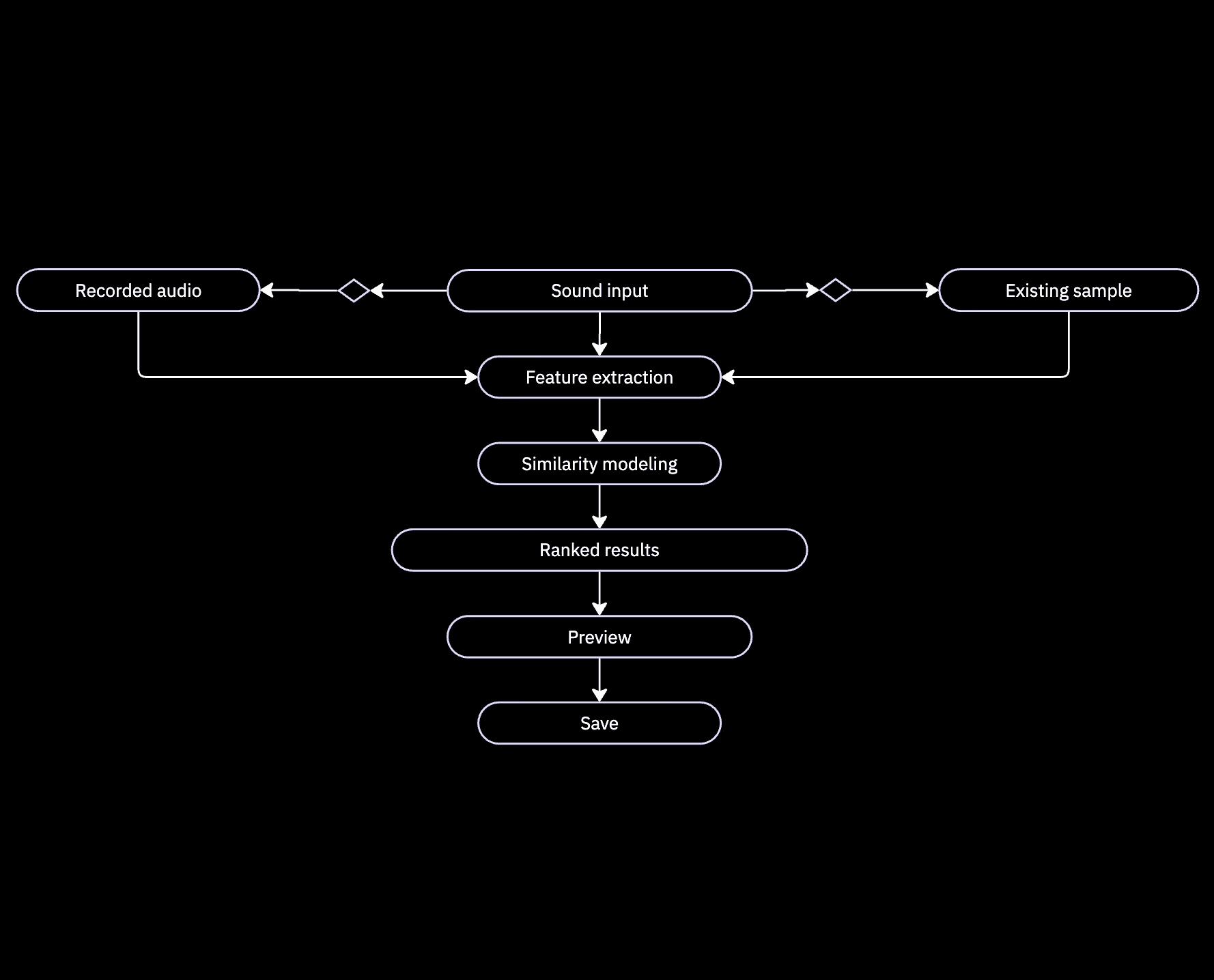

Analyze mode lets users start with any sound for discovery. Instead of browsing libraries, producers can input audio directly. They then get musically compatible options based on spectral balance, rhythm, and harmonic structure.
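To make the idea of ranking by spectral balance, rhythm, and harmonic structure concrete, here is a minimal sketch that scores candidates with a weighted cosine similarity across three descriptor vectors. The descriptor values, the weights, and the function names are illustrative assumptions; a real system would extract features from audio, and the case study does not specify the scoring method.

```python
import math
from typing import Dict, List

Descriptors = Dict[str, List[float]]

# Assumed relative importance of each descriptor group.
WEIGHTS = {"spectral": 0.3, "rhythm": 0.3, "harmonic": 0.4}


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity, returning 0.0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def compatibility(query: Descriptors, candidate: Descriptors) -> float:
    """Weighted sum of per-group similarities between two sounds."""
    return sum(w * cosine(query[k], candidate[k]) for k, w in WEIGHTS.items())


def rank(query: Descriptors, library: Dict[str, Descriptors]) -> List[str]:
    """Return library sound names, most compatible first."""
    return sorted(
        library,
        key=lambda name: compatibility(query, library[name]),
        reverse=True,
    )


# Toy two-dimensional descriptors, purely for illustration.
query = {"spectral": [1.0, 0.0], "rhythm": [1.0, 0.0], "harmonic": [1.0, 0.0]}
library = {
    "far_match": {"spectral": [0.0, 1.0], "rhythm": [0.0, 1.0], "harmonic": [0.1, 1.0]},
    "close_match": {"spectral": [0.9, 0.1], "rhythm": [1.0, 0.0], "harmonic": [1.0, 0.1]},
}
ranked = rank(query, library)
```

Weighting harmonic structure slightly higher reflects the project's emphasis on musical compatibility, though the exact balance here is a guess.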

This method lets you iterate in the same creative space, so you don't have to restart your search for new ideas during a session.

Dec 2025 - Feb 2026

Timeline

Product design (end-to-end experiences)

UX strategy & research

Interaction & flow design

Visual & UI design (mobile & responsive)

Design systems

AI-assisted experience design

Scope

Tools

Figma

Adobe CS

Miro

Create a discovery experience where producers can engage directly with sound. This way, they reduce translation, decision fatigue, and interruptions while making music.

Opportunity

During active production sessions, creators often switch between tasks. They listen to audio, browse folders, apply filters, and turn sounds into keywords. This constant shifting can disrupt their creative flow and slow down progress.

Challenge

Initial Problem Discovery

Sound-to-Sound Discovery

Remix Mode

Radio Mode

Key Insights & Strategic Pivot

The Problem

The Principle

The Pivot

Producers need to turn sound ideas into words when browsing folders and metadata. This process can disrupt their creative flow.

Music discovery should focus on the sound itself. It shouldn't ask users to describe what they hear.

Change how we find things. Move from using tags, filters, and categories to focusing on audio. Let’s base our interaction on sound similarity.

RESEARCH

Competitive Landscape

Observed Industry Patterns

Identified Opportunity

Strategic Position

Strategic Takeaway

Ecosystem Architecture

Design Constraints & Trade-offs

The gap between hearing an idea and using a sound feels broken.

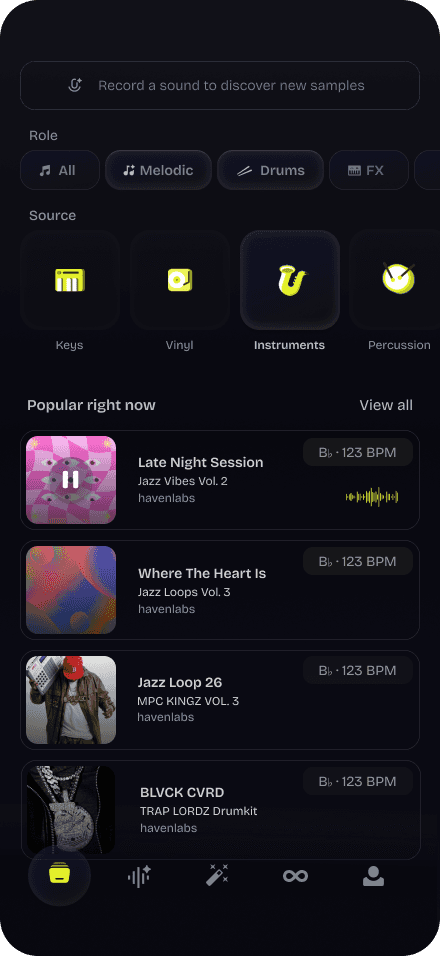

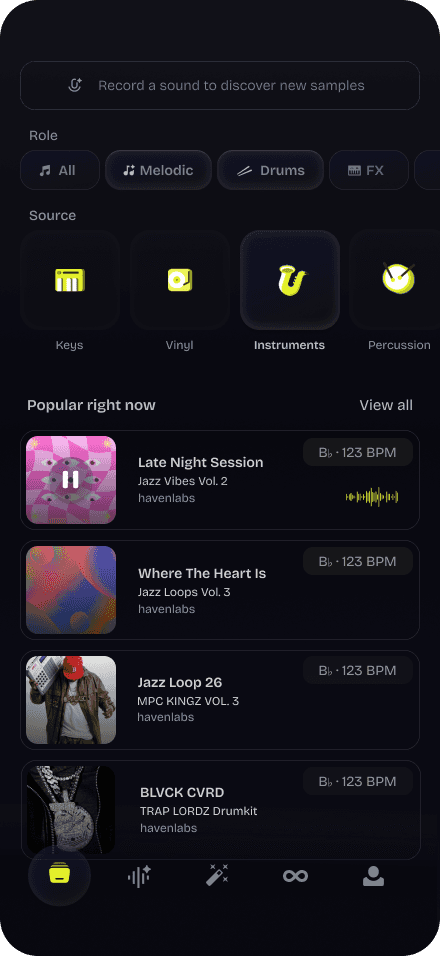

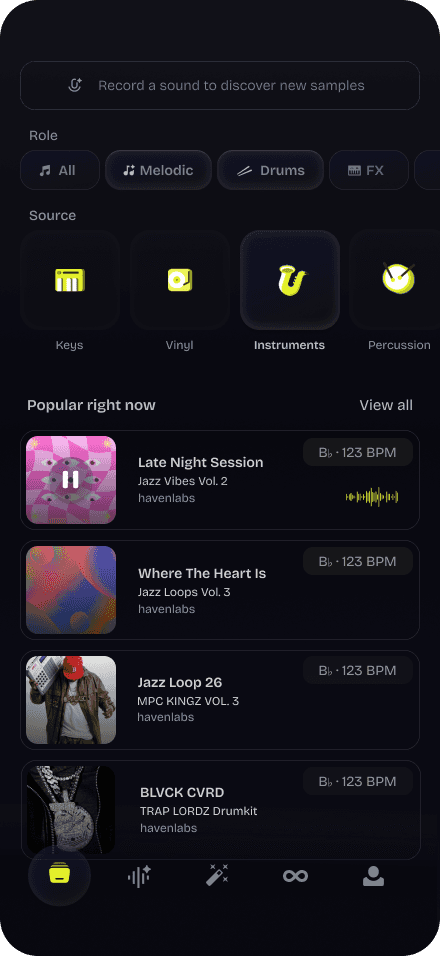

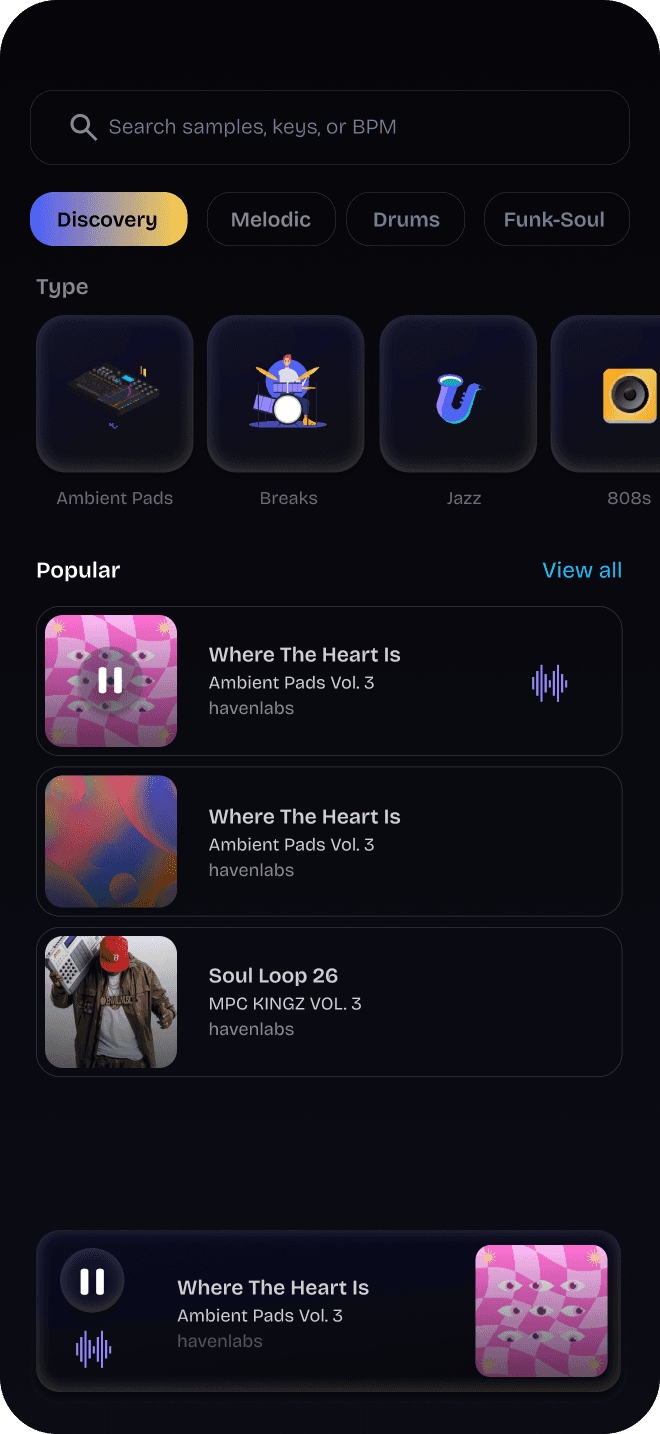

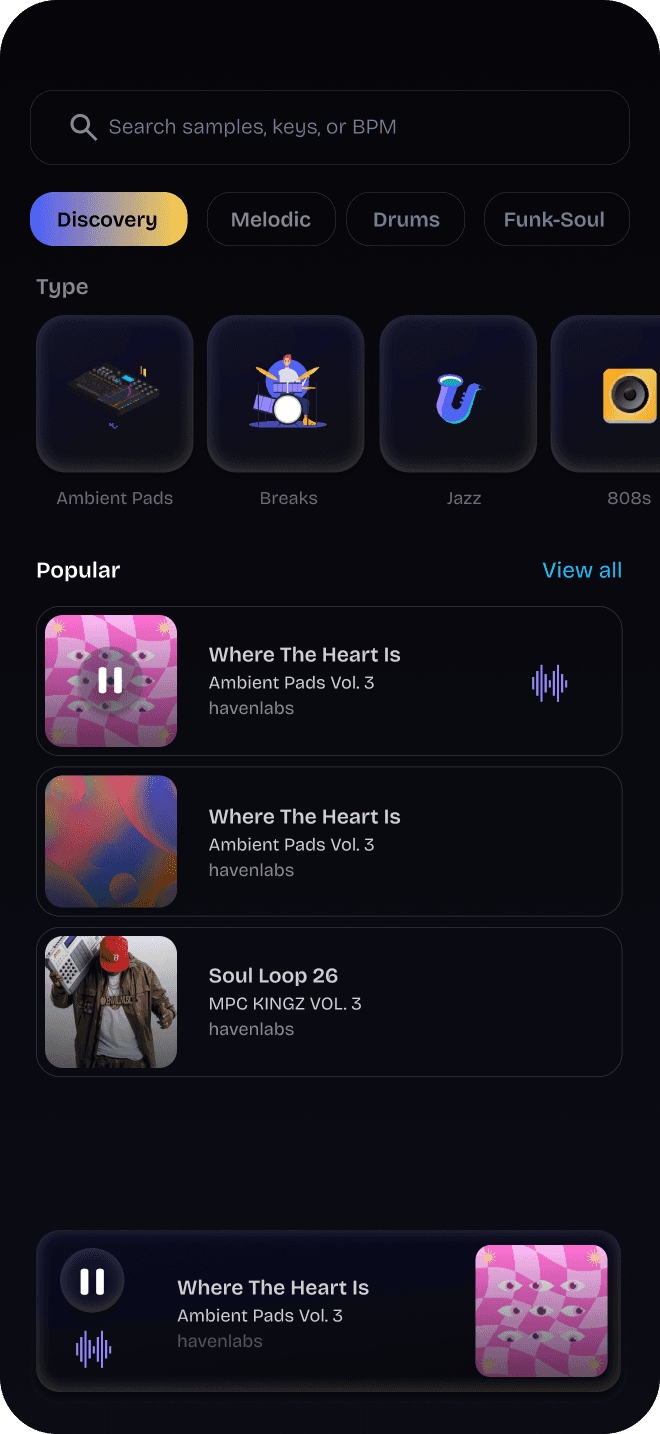

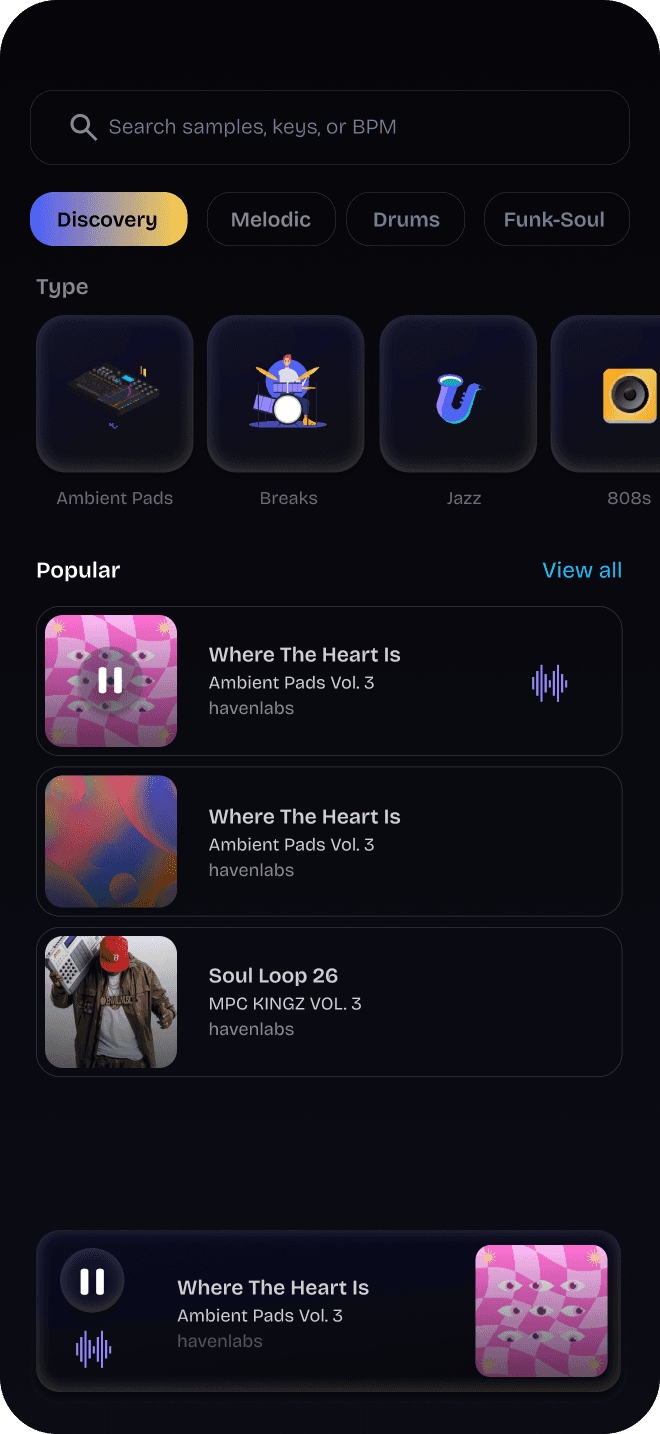

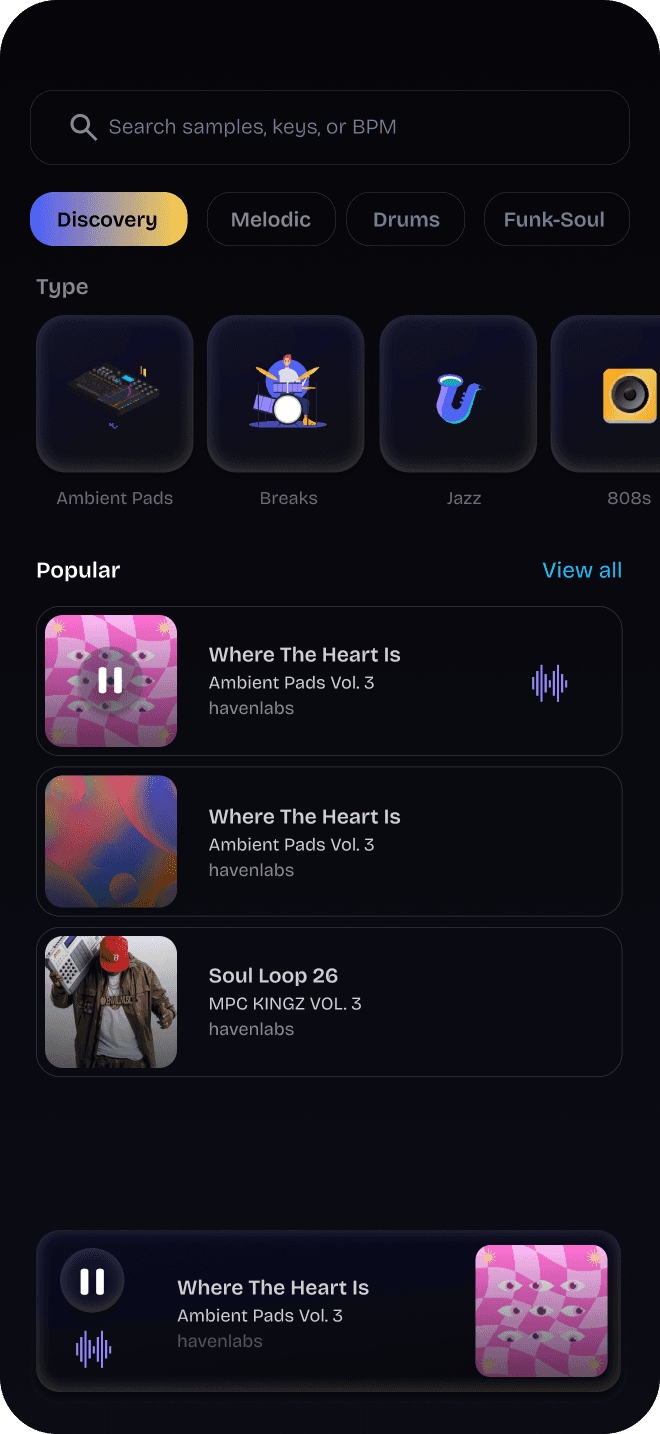

The initial focus was on improving traditional sample discovery. It used familiar search and browsing patterns.

Discovery relied on keywords, BPM, tags, and category navigation. This mirrored how sample libraries organize large sound collections.

This approach enhanced visual clarity and sped up navigation. However, producers still had to pause playback. They needed to translate what they heard into descriptive terms.

Early findings revealed that the main issue was not the interface quality, but the discovery model itself.

Even with upgrades, searching remained a visual and cognitive task, separate from listening. This led to interruptions during delicate creative moments.

This insight led to a shift from metadata-driven browsing to an audio-first, sound-to-sound discovery system.

Early Direction: Search-Driven Discovery

Early Direction: Metadata-Based Discovery

Success is how fast producers return to creation.

Primary Metrics

Time from initial sound input to usable VST-ready sample.

Secondary Signals

Discovery-to-use conversion rate

Saved sounds used in projects

Session interruption frequency
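The primary metric and the conversion signal could be computed from a simple session event log, as in the sketch below. The event names (`sound_input`, `sample_ready`, `sound_saved`, `sound_used`) and the tuple layout are hypothetical, and no real measurements are implied.

```python
from statistics import median

# Hypothetical events: (session_id, seconds_since_session_start, event_name)
events = [
    ("s1", 0, "sound_input"),
    ("s1", 12, "sample_ready"),
    ("s1", 13, "sound_saved"),
    ("s1", 40, "sound_used"),
    ("s2", 0, "sound_input"),
    ("s2", 20, "sample_ready"),
    ("s2", 21, "sound_saved"),
]


def time_to_usable(log):
    """Median seconds from first sound input to first VST-ready sample."""
    starts, readies = {}, {}
    for session, t, name in log:
        if name == "sound_input":
            starts.setdefault(session, t)   # keep the first input per session
        elif name == "sample_ready":
            readies.setdefault(session, t)  # keep the first ready sample
    durations = [readies[s] - starts[s] for s in starts if s in readies]
    return median(durations) if durations else None


def conversion_rate(log):
    """Share of saved sounds that were later used in a project."""
    saved = sum(1 for _, _, name in log if name == "sound_saved")
    used = sum(1 for _, _, name in log if name == "sound_used")
    return used / saved if saved else 0.0
```

Using the median keeps one unusually long session from dominating the primary metric.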

Speed over precision

Similarity results focus on quick auditioning instead of perfect matches. Creative momentum is favored over technical accuracy.

Reduced parameters

Advanced filtering is kept minimal to avoid decision fatigue during active sessions.

Controlled exploration

Radio mode balances relevance and novelty, preventing over-personalization and repetitive output.
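One way to picture the relevance and novelty balance is a blended score that penalizes sound types heard recently. This is a sketch under stated assumptions: the `novelty_weight` value, the tag-based notion of novelty, and the `pick_next` function are all hypothetical, not the system's documented behavior.

```python
def pick_next(candidates, recent_tags, novelty_weight=0.3):
    """Choose the next sound from (name, relevance, tag) tuples.

    relevance is assumed to be in [0, 1]; recent_tags lists the tags of
    sounds played recently, most of which may repeat.
    """

    def blended(item):
        _name, relevance, tag = item
        # Novelty is 1.0 for an unheard tag and shrinks as the tag repeats.
        novelty = 1.0 / (1 + recent_tags.count(tag))
        return (1 - novelty_weight) * relevance + novelty_weight * novelty

    return max(candidates, key=blended)[0]


queue = [("pad_a", 0.9, "pad"), ("pluck_b", 0.8, "pluck")]
fresh_pick = pick_next(queue, recent_tags=[])
tired_pick = pick_next(queue, recent_tags=["pad", "pad", "pad"])
```

With an empty history the more relevant pad wins; after three pads in a row, the slightly less relevant pluck is chosen instead, which is the kind of drift away from repetition the constraint describes.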

If I had more time, I would test the experience with more producers and observe how discovery fits into real production sessions.

With those insights, I would improve interaction clarity, onboarding cues, and feedback states. This way, users would feel confident exploring new sounds.

The focus would stay on reducing friction and supporting creative flow during the discovery process.

Measuring Success

Final Reflection

Challenge

Producers often need to:

pause playback

leave the DAW

browse external tools

audition unrelated samples

manually import files

Each step disrupts creative flow when momentum is delicate.

Discovery starts with listening, not searching. This allows for quick exploration without using keywords, filters, or manual browsing.
